Introduction

Many cloud providers, including the big ones, have been aggressively marketing VM instances powered by ARM-based processors from Ampere Computing. Those instances usually carry a (sometimes much) lower per-vCPU price than traditional x86 instances (AMD or Intel), while promising to deliver nearly the same performance (depending on the workload). It’s worth mentioning that Oracle invested in Ampere Computing early in 2019, which helps explain their more aggressive marketing and the free tier offer on their cloud platform (which I will detail later on).

Applications that benefit from high clock frequencies would be ideal candidates for these Ampere CPUs, given their single-threaded, high-frequency architecture. Another advantage, aimed especially at cloud providers (and some on-premises datacenters), is the high core-to-package ratio, with the Ampere Altra Max featuring up to 128 cores in a single CPU package – all while promising much lower power consumption than an AMD or Intel CPU with the same core count (which would typically require two or more CPU packages).

One application that fits this scenario almost perfectly is Redis. But would we get more performance per dollar (or, in my case, per Brazilian real :) ) than running the same workload on a similar (in terms of vCPU count and amount of RAM) x86 platform?

Let’s find out!

Test setup

- Operating system: Oracle Linux 8.6, with kernel 5.4.17-2136.309.5.el8uek (UEK)

- Cloud provider: Oracle Cloud Infrastructure (OCI)

- Hardware specs - ARM: VM.Standard.A1.Flex instance (Ampere Altra CPU), with 2 OCPUs (equal to 2 vCPUs in the guest) and 8 GB of RAM

- Hardware specs - x86: VM.Standard3.Flex instance (Intel Xeon Platinum 8358 CPU), with 1 OCPU (equal to 2 vCPUs in the guest, due to hyperthreading) and 8 GB of RAM

- Redis: version 5.0.3 (package

redis-5.0.3-5.module+el8.4.0+20382+7694043a, from official OL8 repo) - Benchmarking tool: redis-benchmark 5.0.3, from Redis’ RPM package

- Benchmarks:

- Default settings (100.000 requests, 50 parallel clients, 2 bytes SET/GET data size, no pipelining)

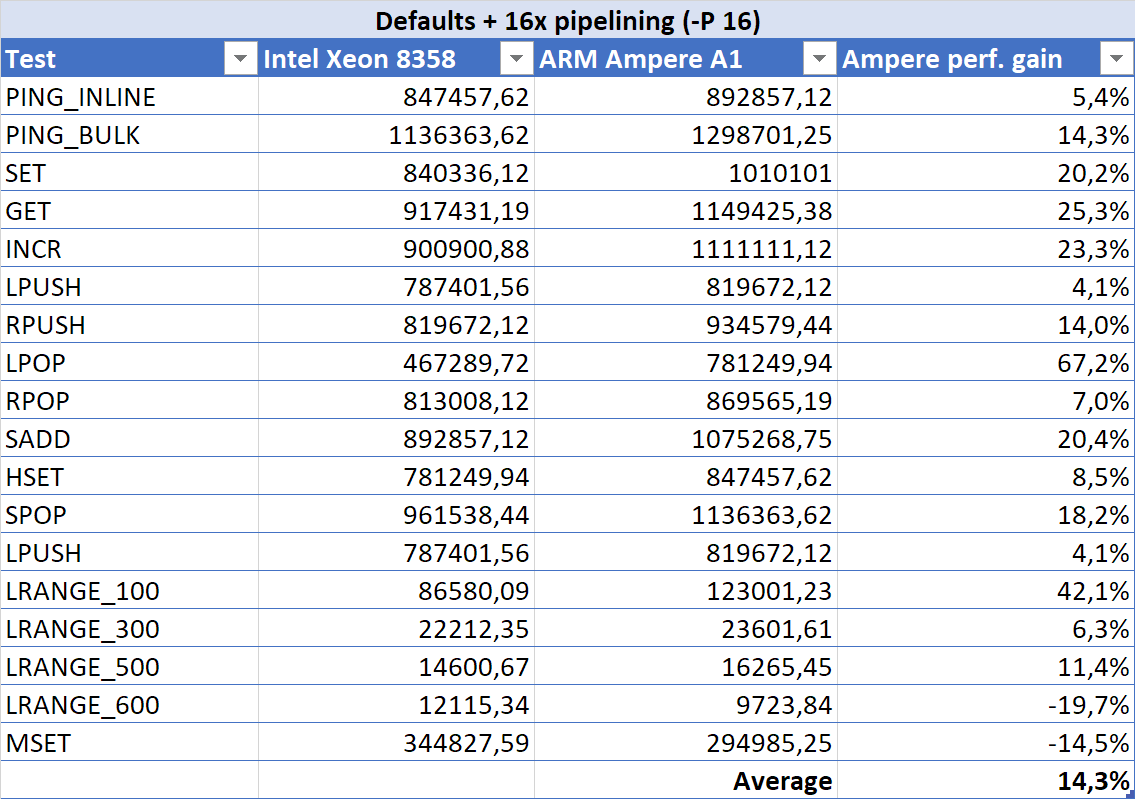

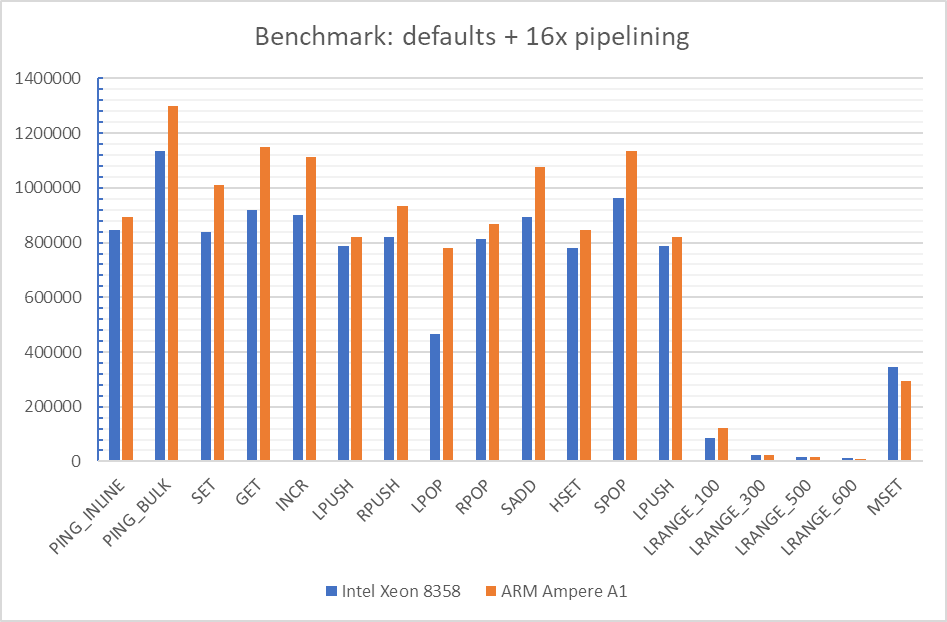

- Default settings + 16 pipelined requests (

-P 16) - 200.000 requests, 100 parallel clients, 16 bytes SET/GET data size (

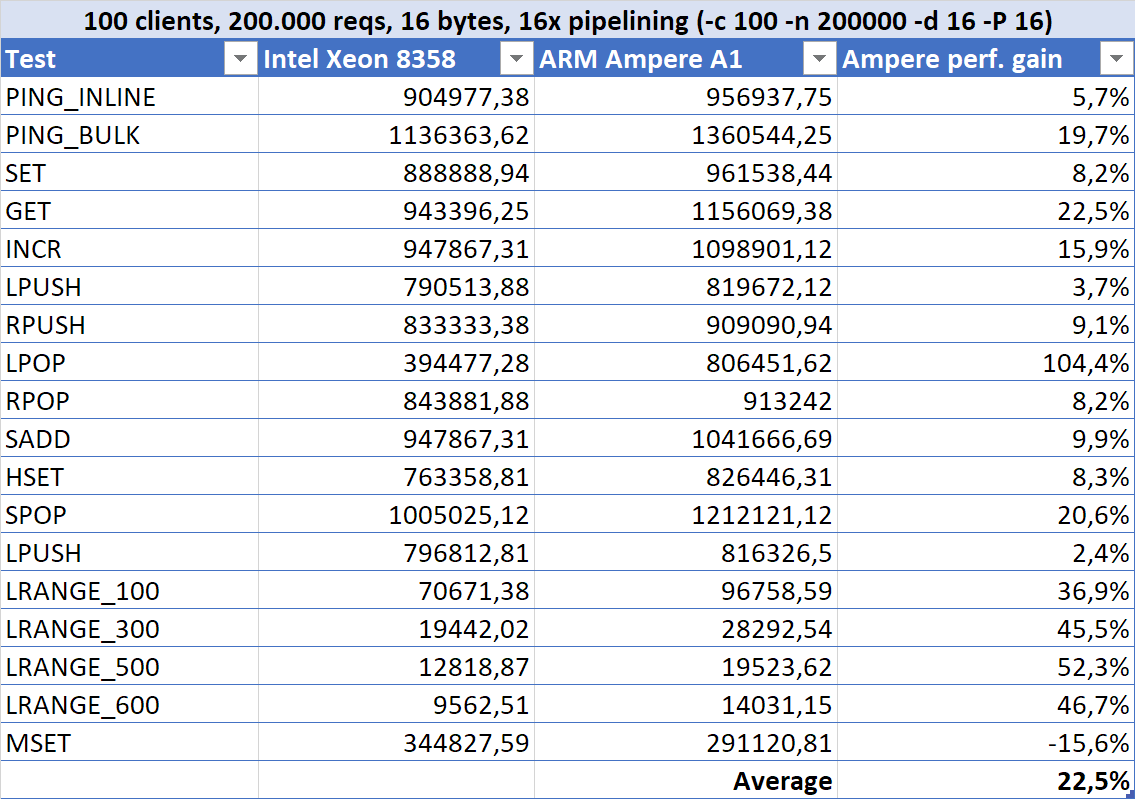

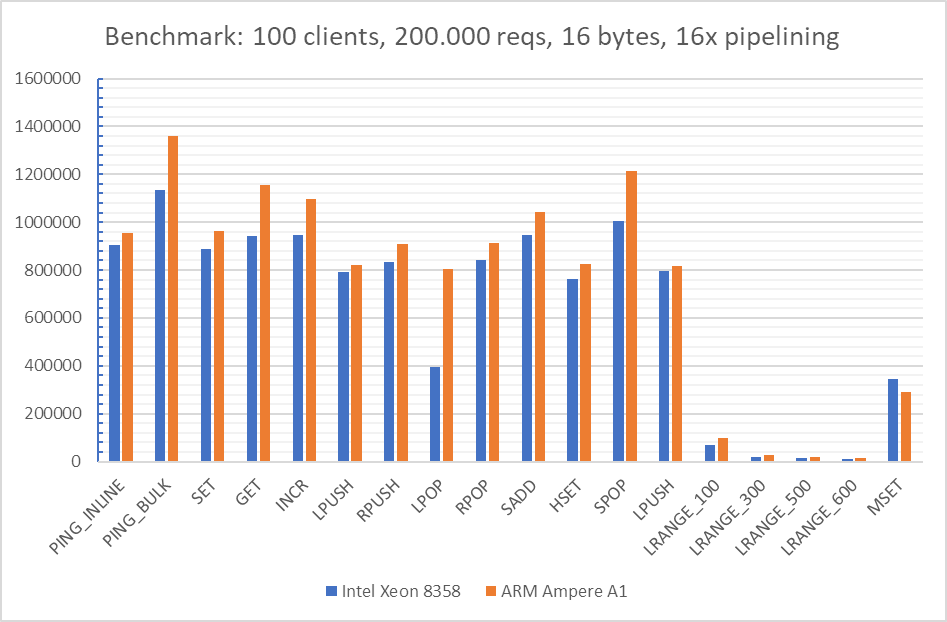

-c 100 -n 200000 -d 16) - 200.000 requests, 100 parallel clients, 16 bytes SET/GET data size + 16 pipelined requests (

-c 100 -n 200000 -d 16 -P 16)
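The four benchmark configurations listed above could be scripted along these lines. This is only a sketch: the host, the port, and the helper names (`build_command`, `run_all`) are assumptions for illustration, not part of the original test procedure. Only the `-c`, `-n`, `-d` and `-P` values come from the list.

```python
import shlex
import subprocess

# Extra redis-benchmark arguments for each configuration in the list above.
# An empty list means the tool runs with its default settings.
BENCHMARKS = {
    "default": [],
    "default + pipelining": ["-P", "16"],
    "100 clients, 200k requests, 16 bytes": ["-c", "100", "-n", "200000", "-d", "16"],
    "100 clients, 200k requests, 16 bytes + pipelining": [
        "-c", "100", "-n", "200000", "-d", "16", "-P", "16",
    ],
}


def build_command(extra_args, host="127.0.0.1", port=6379):
    """Return the redis-benchmark argument vector for one configuration."""
    # Assumption: a Redis server is listening locally on the default port.
    return ["redis-benchmark", "-h", host, "-p", str(port), *extra_args]


def run_all():
    """Run every configuration in turn and let the tool print its own report."""
    for name, extra_args in BENCHMARKS.items():
        cmd = build_command(extra_args)
        print(f"== {name}: {shlex.join(cmd)}")
        subprocess.run(cmd, check=True)
```

Calling `run_all()` on each instance (with Redis already running) would produce one report per configuration. Building the commands in one place keeps the ARM and x86 runs identical.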

Remarks on the test setup

I chose Oracle Cloud Infrastructure mostly because I have a lot of free credits remaining, but also because they are more aggressively marketing the Ampere Altra CPUs. I also liked how clearly the prices are stated, which made it easier for me to draw the comparisons between the two instances.

Oracle Linux was chosen mostly because it’s the standard Linux distro on OCI (you don’t say!), and is fully compatible with Red Hat Enterprise Linux (RHEL) and derivatives (like CentOS, AlmaLinux etc.), with the only difference being the optional “Unbreakable Enterprise Kernel” (UEK), which I used for these tests. Finally, since most corporate systems run on RHEL or on a RHEL-like distribution (source: trust me! :)), and this study will be especially useful for enterprises with large Redis deployments, it made even more sense to use OL8.

There is one final remark that is very important for understanding the performance/price comparisons: the concept of OCPU in Oracle Cloud Infrastructure and how OCPUs are priced. On most cloud providers, you are charged for vCPUs – but they either don’t say, or bury deep in their docs, whether a vCPU is equal to a full CPU core or to a CPU thread (when the physical CPU supports multiple threads per core and they are enabled, of course). Since a CPU thread doesn’t deliver the same performance as a full CPU core for most (all?) workloads (DYOR if you are unaware of this fact), this can become a problem.

OCI simplifies that by pricing their instances per full CPU cores, which they call an OCPU. So, if you are deploying an instance with a (for example) Intel Xeon Platinum 8358 CPU, you will see two virtual CPUs on the guest OS for each OCPU. On the other hand, if you are deploying an instance with an Ampere Altra CPU, which doesn’t have threads, each OCPU will be equal to one virtual CPU on the guest OS. This article on the OCI Blog has more details about that.

For this test, I deployed the Intel instance with one OCPU and the Ampere instance with two OCPUs, which translated to two virtual CPUs on the guest OS for each. Since redis-benchmark runs on only one CPU core, and the instances were entirely dedicated to those tests (nothing else besides the minimum required to boot the OS was running), the difference in OCPU count shouldn’t influence the benchmark results – but when we compare the performance/price, it does matter, as we’ll soon see.

Benchmark results

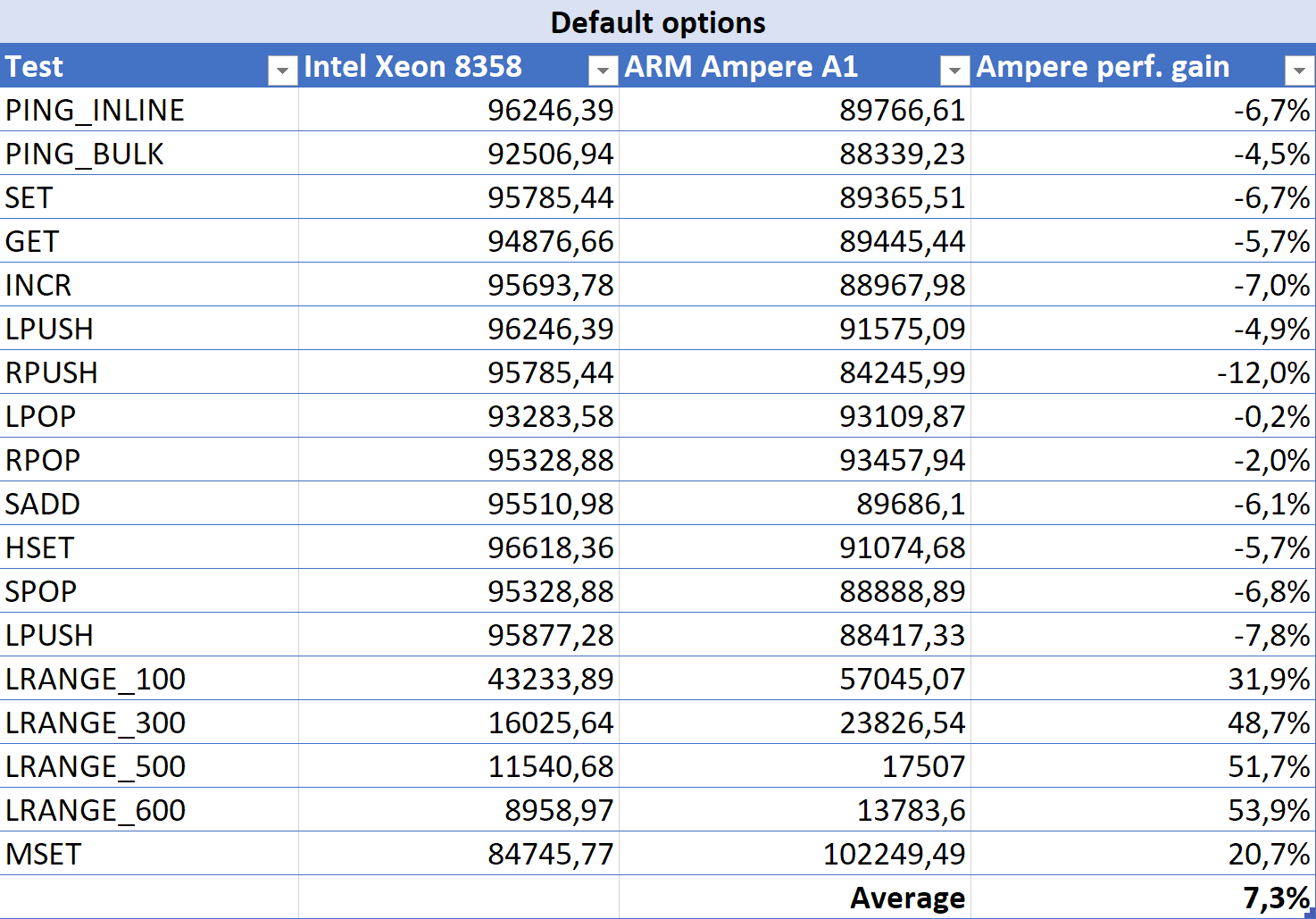

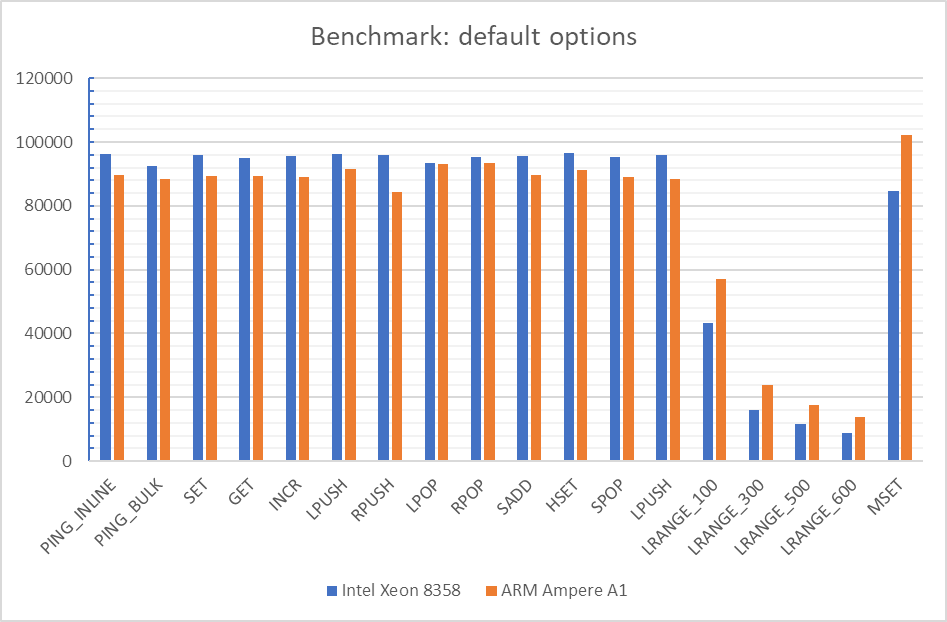

- redis-benchmark with default options:

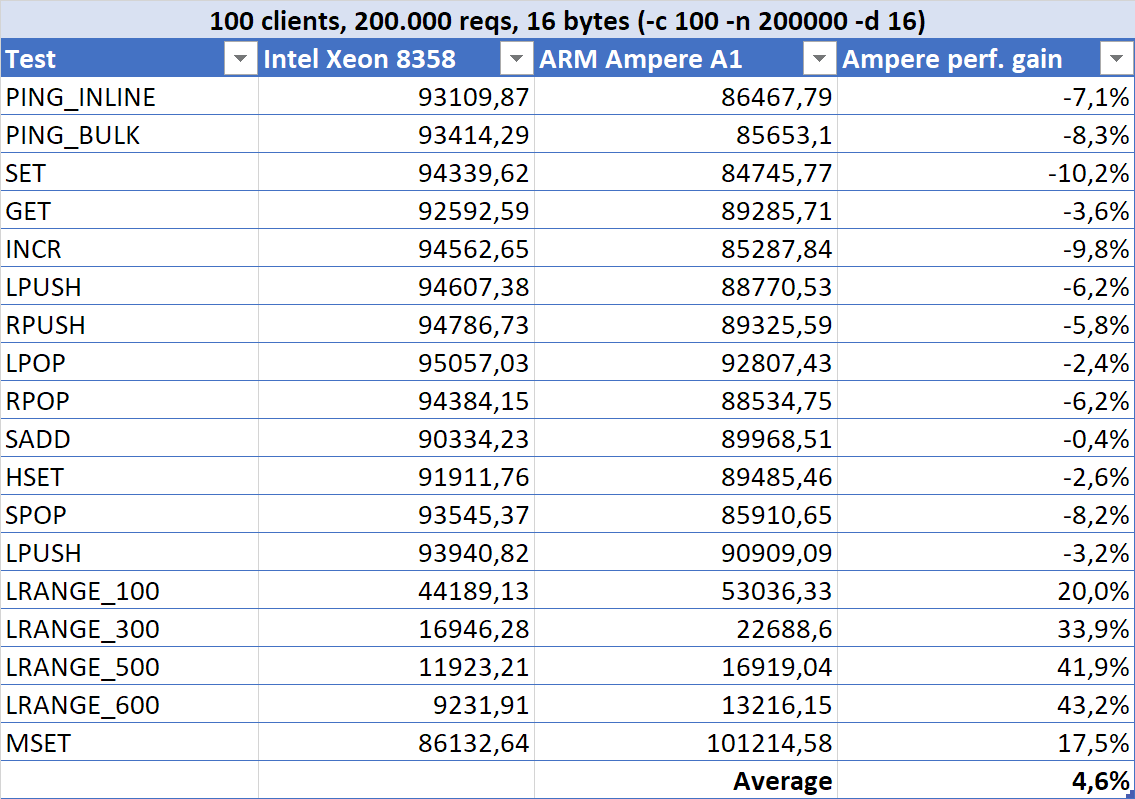

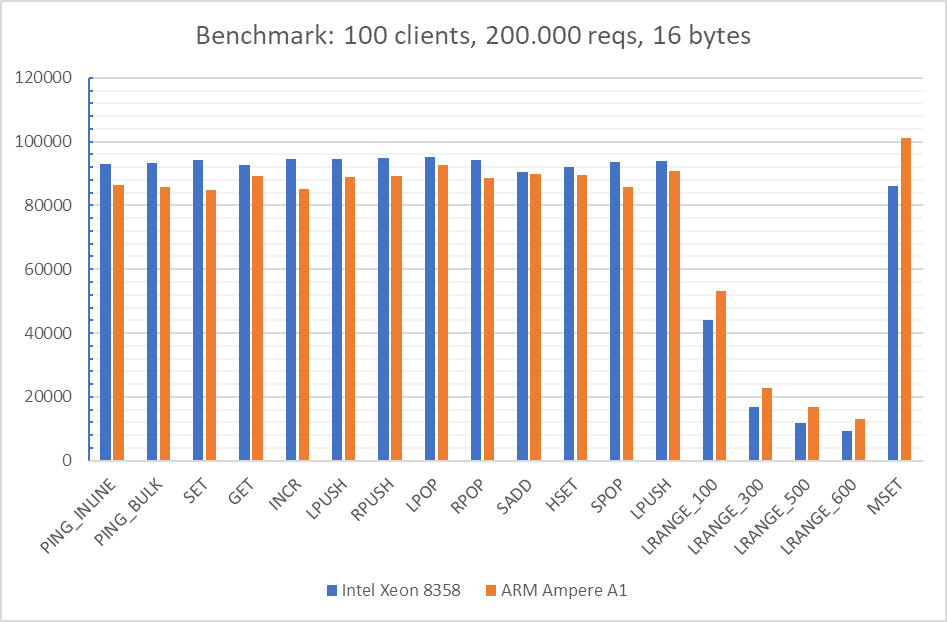

- redis-benchmark with 100 clients, 200.000 requests, and 16 bytes SET/GET data size:

- redis-benchmark with default options and 16 pipelined requests:

- redis-benchmark with 100 clients, 200.000 requests, 16 bytes SET/GET data size and 16 pipelined requests:

Cost-benefit analysis

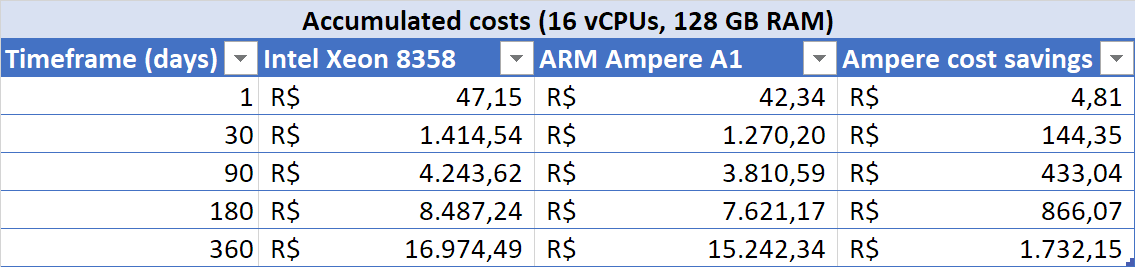

We need to consider various scenarios and plot the accumulated costs of each one over a longer timeframe (at least one year), to better understand their financial impact. And remember the whole OCPU vs. vCPU story? Well, now it becomes critical. If we compare each instance on a per-vCPU basis, we have this:

- Intel Xeon 8358, vCPU/hour: R$ 0,06263

- Ampere Altra, vCPU/hour: R$ 0,0501

Ampere is about 20% cheaper than Intel (put the other way around, Intel is about 25% more expensive than Ampere), but there’s more: on the Ampere platform, 1 OCPU = 1 vCPU, but on the Intel platform, 1 OCPU = 2 vCPUs, because each core has hyperthreading. Since on OCI the minimum we can get is 1 OCPU (be it with hyperthreading or not), we will actually be charged R$ 0,12526/hour for the Intel instance, even if we need only one vCPU (but ideally you won’t want that: get a full core whenever possible). So perhaps we should lay out the prices like this:

- Intel Xeon 8358, OCPU/hour: R$ 0,12526

- Ampere Altra, OCPU/hour: R$ 0,0501
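To make the two framings concrete, here is a small sketch that computes the relative price differences from the four hourly prices listed above. The function names are illustrative only.

```python
# Hourly prices in BRL, copied from the two lists above.
INTEL_PER_VCPU = 0.06263
AMPERE_PER_VCPU = 0.0501
INTEL_PER_OCPU = 0.12526   # 1 OCPU = 2 vCPUs (hyperthreading)
AMPERE_PER_OCPU = 0.0501   # 1 OCPU = 1 vCPU


def percent_cheaper(price, reference):
    """How much cheaper `price` is than `reference`, in percent."""
    return (1 - price / reference) * 100


def percent_more_expensive(price, reference):
    """How much more expensive `price` is than `reference`, in percent."""
    return (price / reference - 1) * 100


print(f"per vCPU: Ampere is {percent_cheaper(AMPERE_PER_VCPU, INTEL_PER_VCPU):.1f}% cheaper")
print(f"per vCPU: Intel is {percent_more_expensive(INTEL_PER_VCPU, AMPERE_PER_VCPU):.1f}% more expensive")
print(f"per OCPU: Ampere is {percent_cheaper(AMPERE_PER_OCPU, INTEL_PER_OCPU):.1f}% cheaper")
```

Per vCPU the gap is roughly 20% (or 25%, if Intel's price is measured against Ampere's); per OCPU it grows to roughly 60%, because the Intel OCPU price covers two vCPUs.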

I will compare vCPU-to-vCPU prices in most scenarios, but in one of them I’ll compare OCPU-to-OCPU (as if the hyperthreads were superfluous, since, depending on the workload, that will “almost” be the case).

RAM prices are the same for both platforms: R$ 0,00752 per GB per hour, which I will add to the final prices of each scenario.

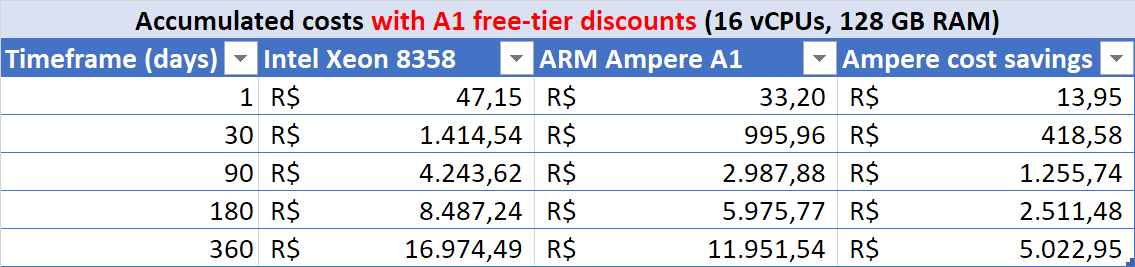

Another very important factor to consider is OCI’s free tier: each tenancy gets the first 3.000 OCPU hours and 18.000 GB hours per month for free to create Ampere A1 Compute instances using the VM.Standard.A1.Flex shape (equivalent to 4 OCPUs and 24 GB of memory). I will draw a scenario with that taken into consideration as well: a 16-OCPU Ampere Altra instance will be billed as a 12-OCPU instance, and 128 GB of RAM will be billed as 104 GB.
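The free tier arithmetic can be sketched as follows. The assumption here (not stated in the text) is that the instance runs 24/7 and that the monthly allowance is measured against a 31-day month of 744 hours; the helper names are illustrative.

```python
import math

FREE_OCPU_HOURS = 3000            # per month, from the free tier described above
FREE_GB_HOURS = 18000             # per month, from the free tier described above
HOURS_IN_LONGEST_MONTH = 31 * 24  # 744; assumption: the instance is always on


def always_on_allowance(free_hours, hours_in_month=HOURS_IN_LONGEST_MONTH):
    """Whole units (OCPUs or GB) the free hours cover for an entire month."""
    return math.floor(free_hours / hours_in_month)


def billed_units(requested, free):
    """Units that are actually charged once the free allowance is subtracted."""
    return max(requested - free, 0)


free_ocpus = always_on_allowance(FREE_OCPU_HOURS)  # 4
free_gb = always_on_allowance(FREE_GB_HOURS)       # 24

print(billed_units(16, free_ocpus), "OCPUs billed")  # 12
print(billed_units(128, free_gb), "GB billed")       # 104
```

Under these assumptions the allowance works out to 4 OCPUs and 24 GB, which matches the equivalence quoted above and gives the 12 OCPUs and 104 GB used in the scenario.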

Finally, we also need to consider the results of each benchmark: the Ampere Altra instance outperformed its rival in all of them, if we consider the average of the individual tests inside each benchmark. The best result will be used as a weight for the prices: let’s say that the Ampere Altra was 22,5% faster, on average, than the Intel Xeon on the best benchmark; I’ll then apply a discount of 22,5% to the Ampere Altra OCPU prices.

Accumulated costs showdown

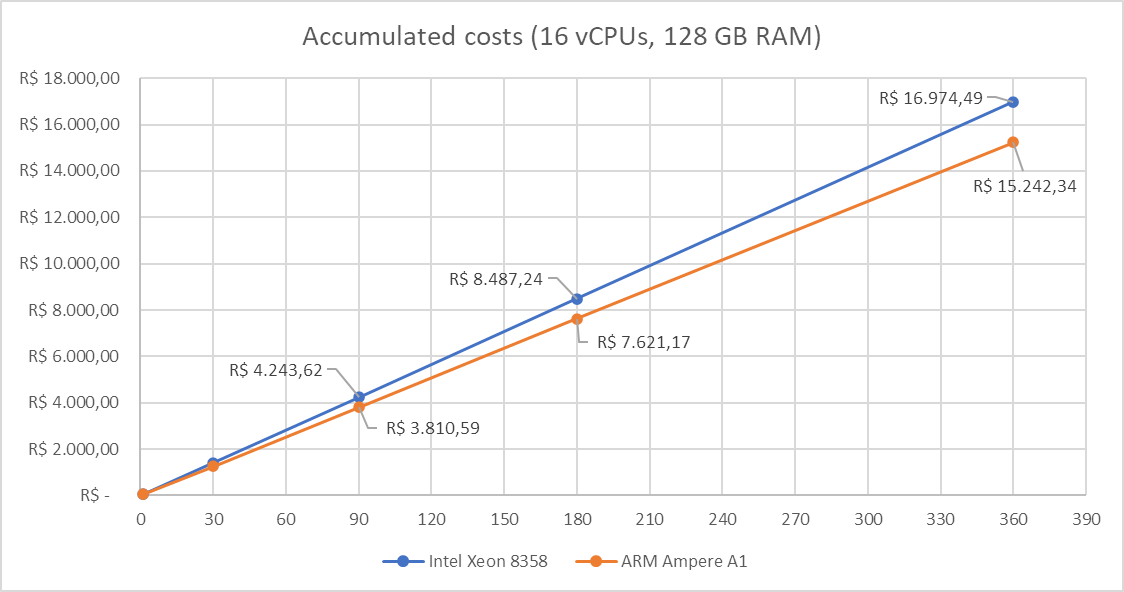

- Accumulated costs for 16 vCPUs and 128 GB of RAM:

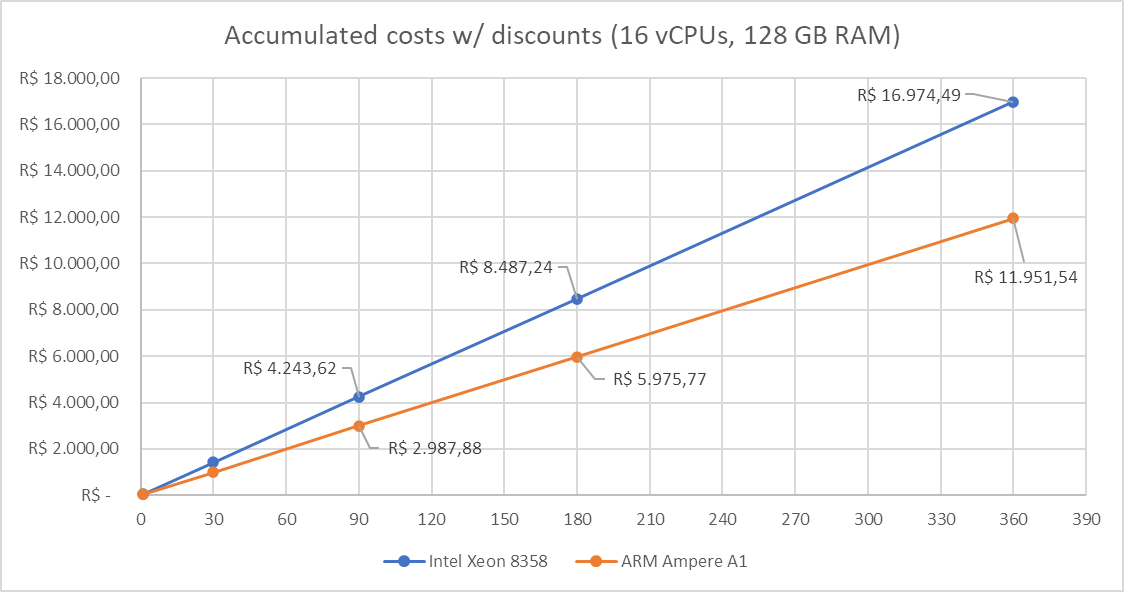

- Accumulated costs for 16 vCPUs and 128 GB of RAM, applying the A1 free tier discounts (counting 12 vCPUs and 104 GB of RAM for Ampere):

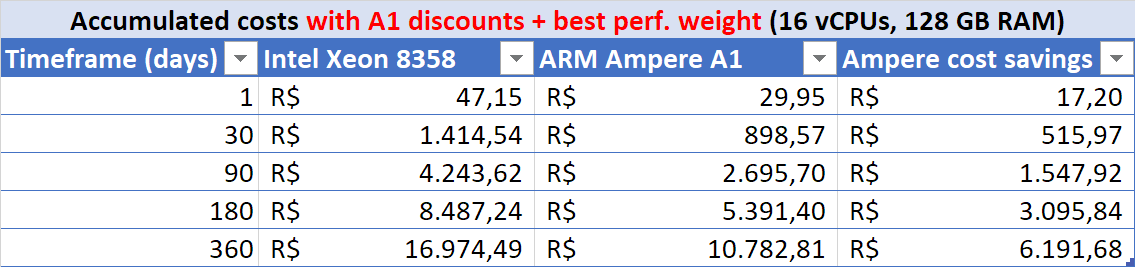

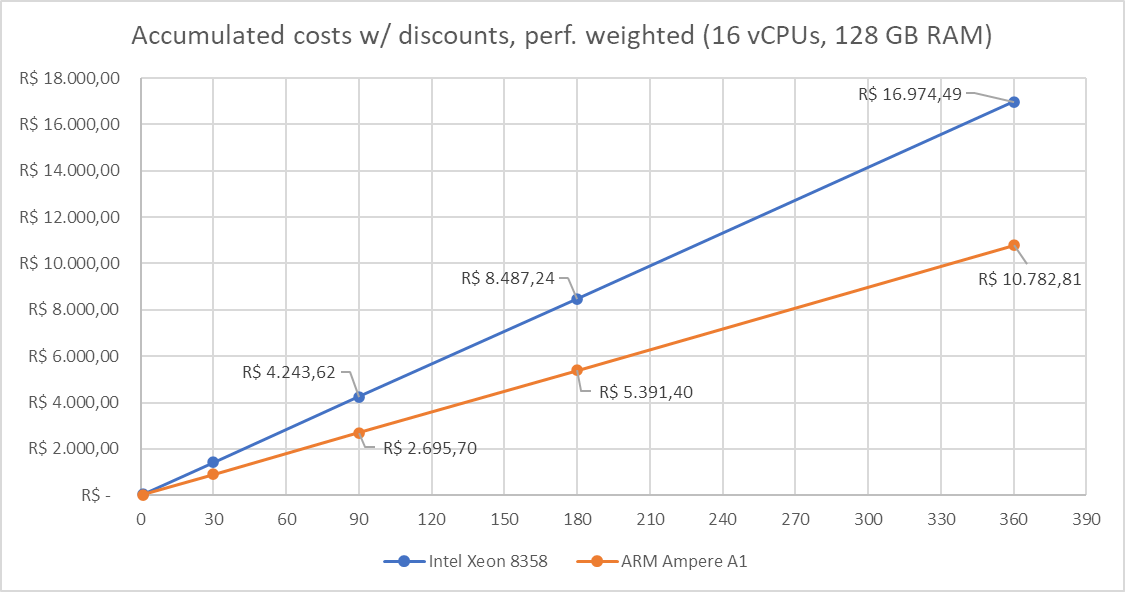

- Accumulated costs for 16 vCPUs and 128 GB of RAM, applying the A1 free tier discounts plus a 22,5% discount on Ampere’s vCPU pricing (factoring in the best performance gain achieved on the benchmarks):

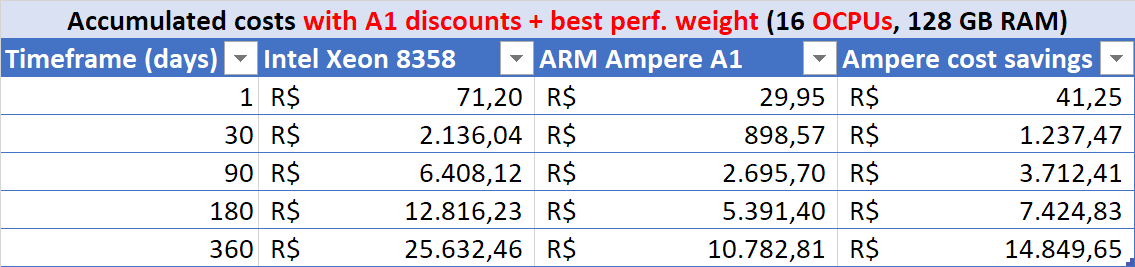

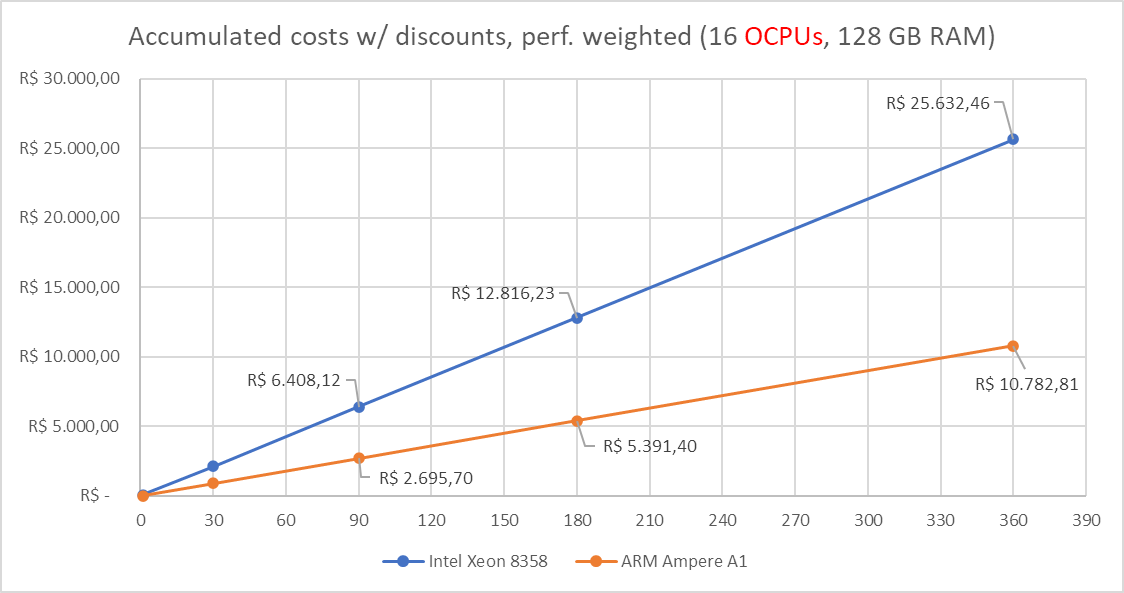

- Accumulated costs for 16 OCPUs and 128 GB of RAM, applying the A1 free tier discounts plus a 22,5% discount (performance weight). Notice how the Intel platform becomes much more expensive when we compare OCPUs to OCPUs, although on that platform we get an extra vCPU per OCPU (but not a real CPU core performance-wise, as I explained before; read more about that in the conclusions):

Conclusions and future work

In the best-case scenario (for Ampere Altra), we were able to save R$ 14.849,65 in one year, for a 16-OCPU instance with 128 GB of RAM.
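As a sanity check, the figure above can be reproduced from the prices in this article under one set of assumptions: an OCPU-to-OCPU comparison, the A1 free tier (12 OCPUs and 104 GB billed for Ampere), the 22,5% performance weight applied to Ampere's OCPU price only, and a year counted as 12 months of 30 days (8.640 hours). The year length in particular is a guess that happens to match the number; the function name is illustrative.

```python
INTEL_OCPU_HOUR = 0.12526     # BRL, from the price list
AMPERE_OCPU_HOUR = 0.0501     # BRL, from the price list
RAM_GB_HOUR = 0.00752         # BRL, same for both platforms
PERFORMANCE_WEIGHT = 0.225    # 22,5% discount on Ampere's OCPU price
HOURS_PER_YEAR = 12 * 30 * 24 # assumption: 12 months of 30 days = 8640 hours


def hourly_cost(ocpus, ram_gb, ocpu_price, cpu_discount=0.0):
    """Hourly instance cost: discounted CPU price plus RAM price."""
    return ocpus * ocpu_price * (1 - cpu_discount) + ram_gb * RAM_GB_HOUR


# Intel pays for all 16 OCPUs and 128 GB.
intel_hourly = hourly_cost(16, 128, INTEL_OCPU_HOUR)
# Ampere is billed for 12 OCPUs and 104 GB after the free tier.
ampere_hourly = hourly_cost(12, 104, AMPERE_OCPU_HOUR, PERFORMANCE_WEIGHT)

yearly_savings = (intel_hourly - ampere_hourly) * HOURS_PER_YEAR
print(f"R$ {yearly_savings:.2f} saved per year")  # R$ 14849.65 saved per year
```

Counting a full 365-day year (8.760 hours) instead would give a slightly larger number, so the exact amount depends on how the billing period is modeled.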

Pipelining requests seems to be very beneficial for the Ampere Altra CPU (more than for the Intel Xeon CPU).

Real world scenarios may be somewhat different, so it may be important to perform more specific benchmarks before making a choice, taking into account things like network latency and bandwidth, other processes competing for resources on the server etc.

Cost-benefit analysis scenarios that count the extra hyperthread of each Intel core as 0,5 of a full core could be interesting. In that case, we would need to pay for 1,5 Ampere Altra OCPUs for each Intel Xeon OCPU.

It would be useful to repeat this whole study with AMD CPUs instead of Intel, as they usually offer a slightly better cost-benefit ratio (again, depending on the workload).

Performing this whole study on other cloud providers that also offer the Ampere CPU families can yield very different results, be it because of different pricing models and/or different performance characteristics of the physical hardware and hypervisor.