Introduction

Recently, while browsing the web looking for some interesting stuff, I stumbled upon a project called WANem, which aims to simulate WAN links by just booting a VM, configuring the network interfaces and setting up the WAN link parameters in a nice web UI. It can be very useful for studying computer networking topics, such as transport protocols, TCP congestion control algorithms, resilience against delays and losses, etc. It may also help with some QA tests, especially when you need to check how an application behaves in certain WAN scenarios.

So, I decided to try it and have some fun.

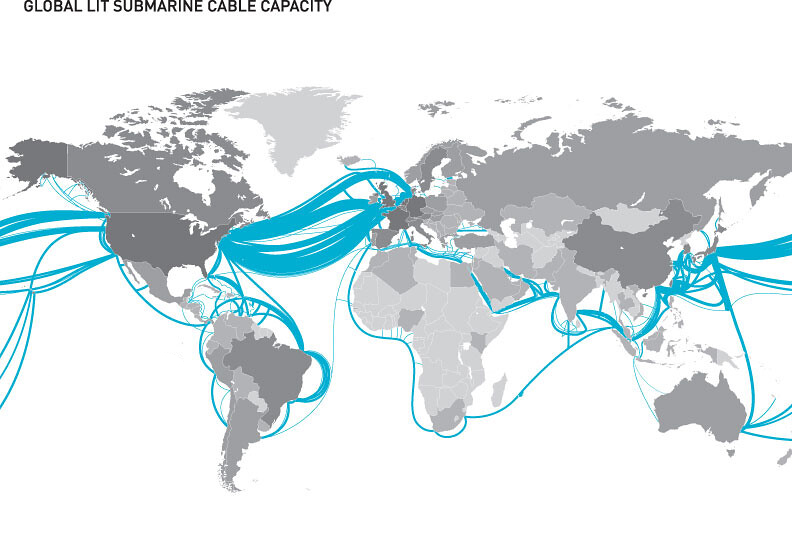

Simulated scenario

Since the WANem ISO is ancient by now (it’s based on Knoppix 5.3.1, with kernel 2.6.24), you need to make sure the VM you create for it is fully compatible, especially regarding the NICs. My scenario is as follows:

- Two virtual switches, one being reachable by the host (switch-01), and another one isolated (switch-02).

- WANem VM (wanem-vm): connected to both switches.

- Linux VM 1 (linux-vm-01): connected to switch-01 only.

- Linux VM 2 (linux-vm-02): connected to switch-02 only.

The idea is to have wanem-vm route packets between linux-vm-01 and linux-vm-02, applying whatever traffic shaping we want. It’s important that wanem-vm is also reachable by the host, as we need to access its web UI to configure it, hence the connection to a switch that is also reachable by the host.

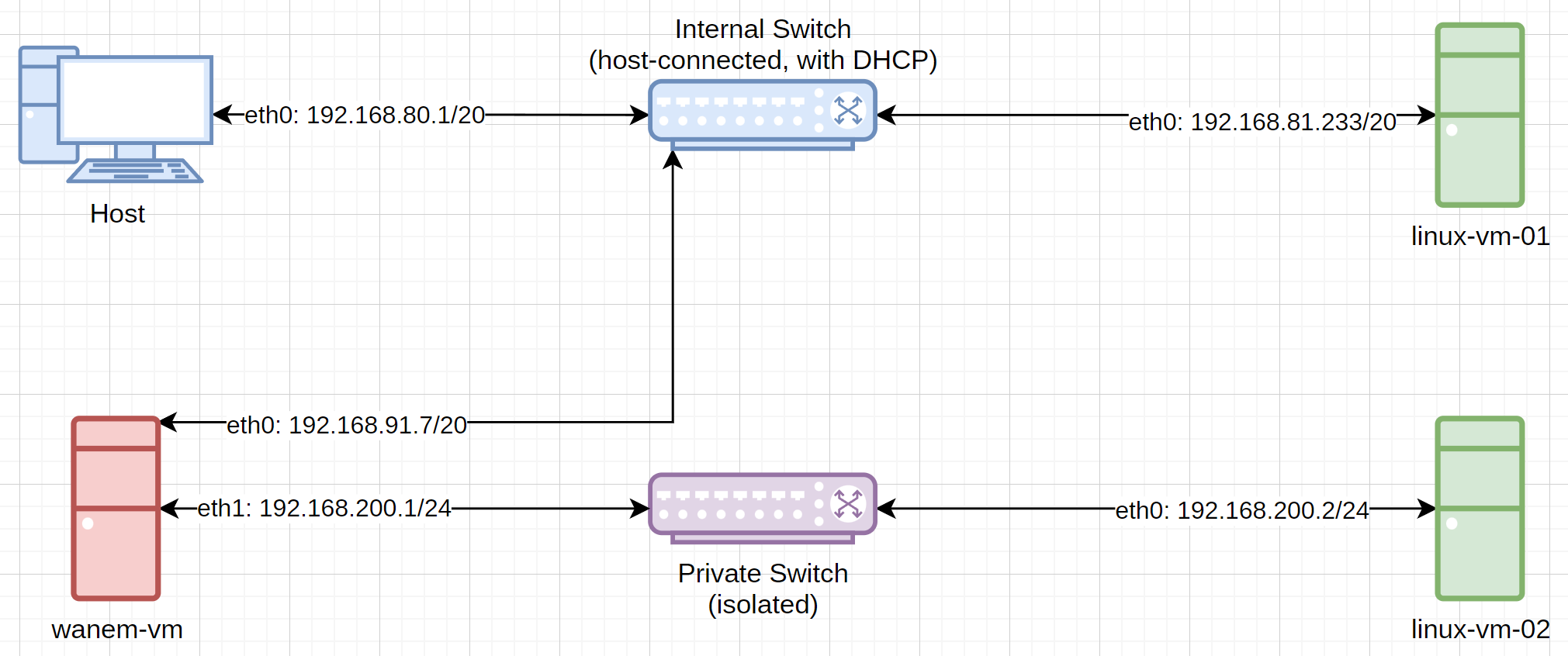

The diagram below summarizes this scenario:

Creating and configuring the VMs

Virtual Switches

Create the two virtual switches, as described before. Make sure one of them is reachable by the host, and the other one is fully isolated. You can enable a DHCP server or not; in my case, I used DHCP only for the first switch (switch-01).

WANem VM

Create a VM with 1 vCPU and 1 GB of RAM (512 MB may suffice). Use the WANem ISO as the boot device (no need to create a virtual disk). Make sure you attach two compatible NICs, one to each virtual switch.

Linux VMs

I used Oracle Linux 8, but any distro should work. I gave 2 vCPUs and 1 GB of RAM to each. The first Linux VM (linux-vm-01) should be attached to only one of the switches, while linux-vm-02 should be attached only to the other one. Some important remarks:

- Install whatever testing utilities you may want beforehand (while the VMs can still reach the Internet), such as iPerf, IPTraf, tcpdump, etc.

- After configuring the IP addresses, disable anything that may interfere with the networking, such as NetworkManager, firewalld, systemd-networkd, etc. We don’t want this stuff messing with our configuration.
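As a rough sketch of both remarks on Oracle Linux 8 (the package names and the exact set of services are assumptions; adjust them to your distro and to what is actually installed):

```shell
# Run as root on each Linux VM while it can still reach the Internet.
# Package names are assumptions for Oracle Linux 8; other distros may differ.
dnf install -y iperf3 iptraf-ng tcpdump

# Once the IP addresses are configured, stop the services that could
# rewrite addresses, routes, or firewall rules behind our back.
systemctl disable --now firewalld
systemctl disable --now NetworkManager
```

Keep in mind that addresses and routes set by hand with the ip command do not survive a reboot once these services are disabled.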

Setting up the IPs and routes

wanem-vm

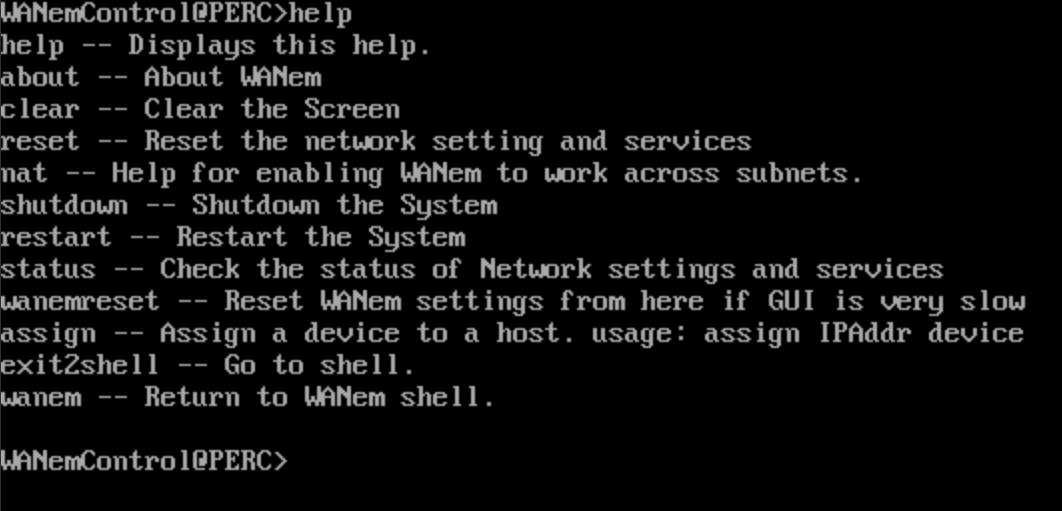

After the first boot, we’ll be presented with the WANem shell:

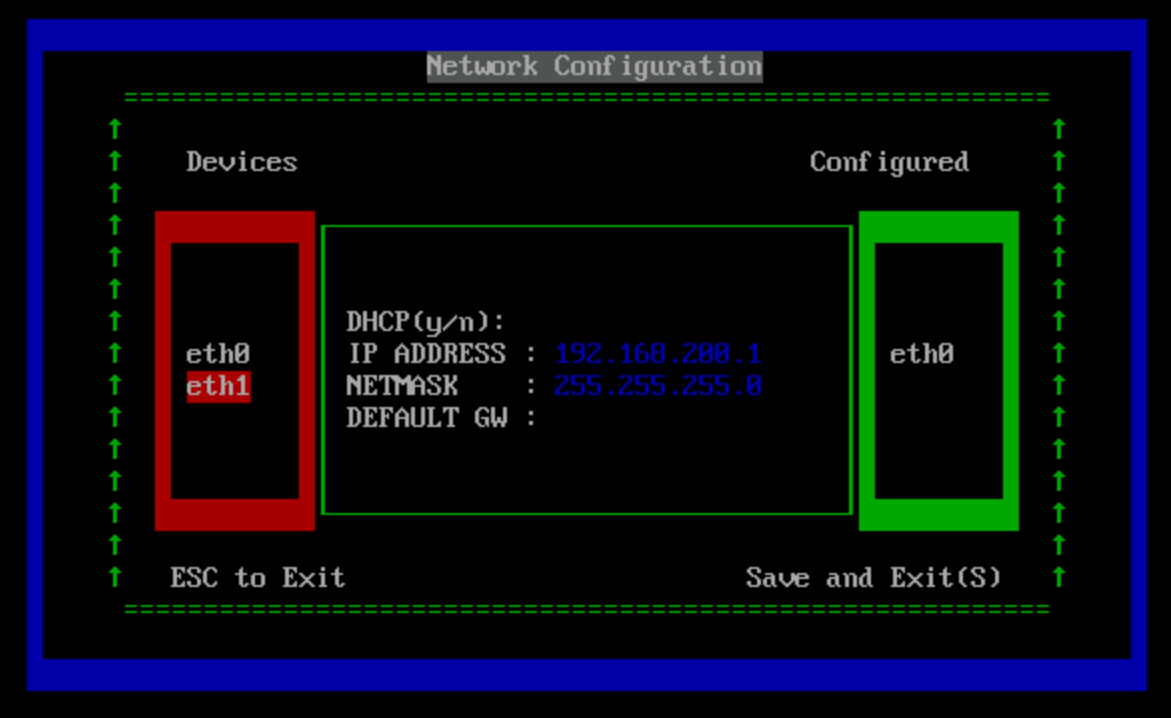

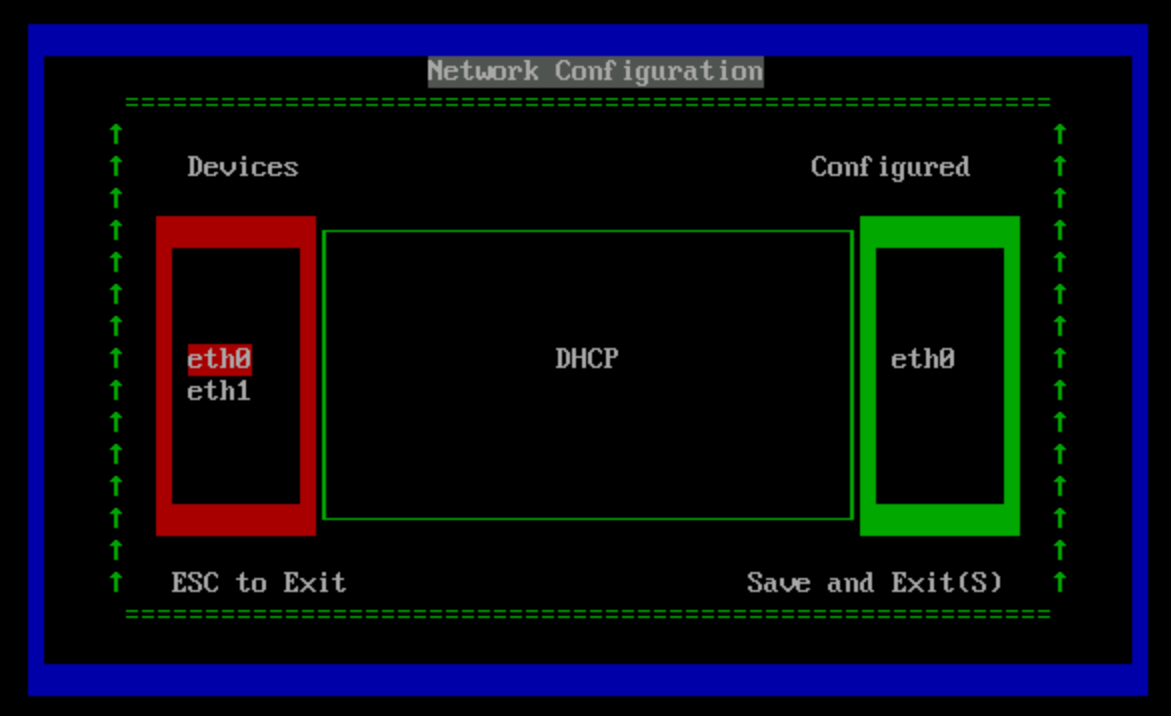

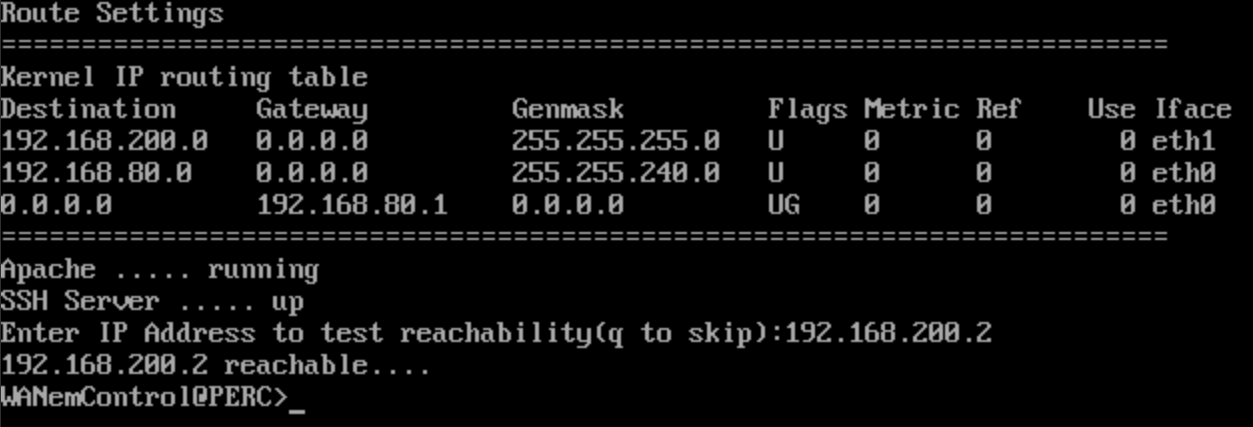

Type reset to reconfigure the NICs: eth0 should be attached to switch-01, getting an IP through DHCP; and eth1 should be attached to switch-02, needing manual configuration. Refer to the following images:

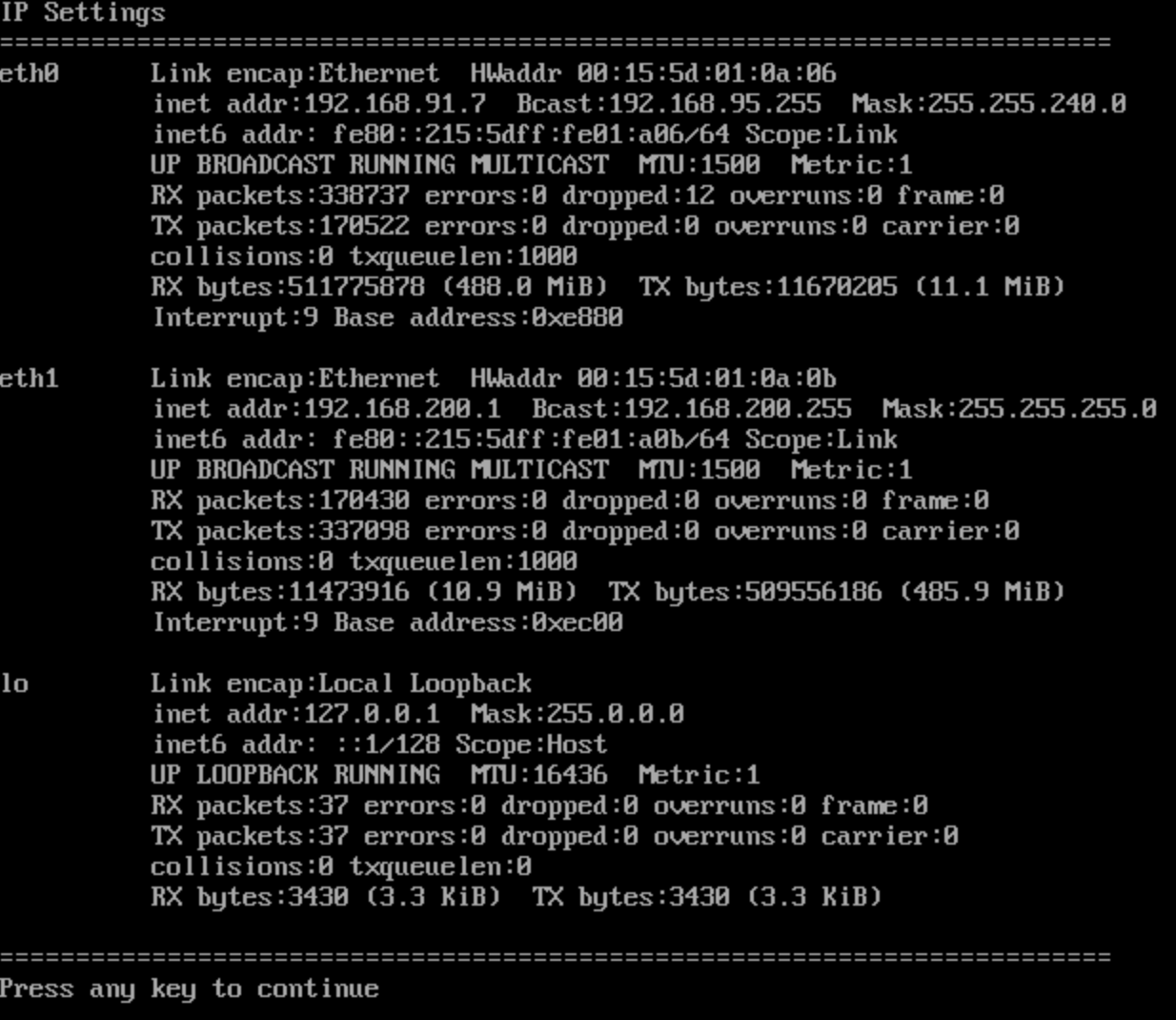

You can then confirm the status by typing status at the WANem shell:

linux-vm-01

This VM will be attached to switch-01, and as such it will get an IP address through DHCP. We now need to configure the route to linux-vm-02 via wanem-vm:
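A minimal sketch of that route, taking 192.168.91.7 (the address used for the WANem web UI) as wanem-vm's eth0 address and assuming a hypothetical 10.0.0.0/24 subnet for switch-02:

```shell
# Run as root on linux-vm-01.
# 192.168.91.7 is wanem-vm's eth0 address (the one used for the web UI).
# 10.0.0.0/24 is a hypothetical subnet for switch-02; use your own.
ip route add 10.0.0.0/24 via 192.168.91.7
```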


linux-vm-02

This VM will be attached to switch-02, and as such we’ll need to manually assign an IP address to it:
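Something along these lines should do, assuming a hypothetical interface name (eth0) and a hypothetical address (10.0.0.2/24) on the isolated network:

```shell
# Run as root on linux-vm-02.
# eth0 and 10.0.0.2/24 are hypothetical; check the real NIC name with `ip link`.
ip addr add 10.0.0.2/24 dev eth0
ip link set dev eth0 up
```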


We now need to configure the route to linux-vm-01 via wanem-vm:
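A sketch of the return route, assuming switch-01 uses 192.168.91.0/24 (inferred from wanem-vm's 192.168.91.7 address; the prefix length is a guess) and that wanem-vm's eth1 has the hypothetical address 10.0.0.1:

```shell
# Run as root on linux-vm-02.
# 192.168.91.0/24 is the assumed switch-01 subnet.
# 10.0.0.1 is a hypothetical address for wanem-vm's eth1.
ip route add 192.168.91.0/24 via 10.0.0.1
```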


At this point we should be able to ping linux-vm-02 from linux-vm-01 and vice-versa.
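A quick way to check it from linux-vm-01, where 10.0.0.2 is a hypothetical address standing in for linux-vm-02:

```shell
# Run on linux-vm-01. 10.0.0.2 is a hypothetical address for linux-vm-02.
# Show which next hop the kernel would use (it should be wanem-vm).
ip route get 10.0.0.2

# Send three echo requests through wanem-vm.
ping -c 3 10.0.0.2
```

If the ping fails, checking the route in the opposite direction on linux-vm-02 is usually the next step, since a missing return route looks exactly like a dead link.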

Configuring the WAN link

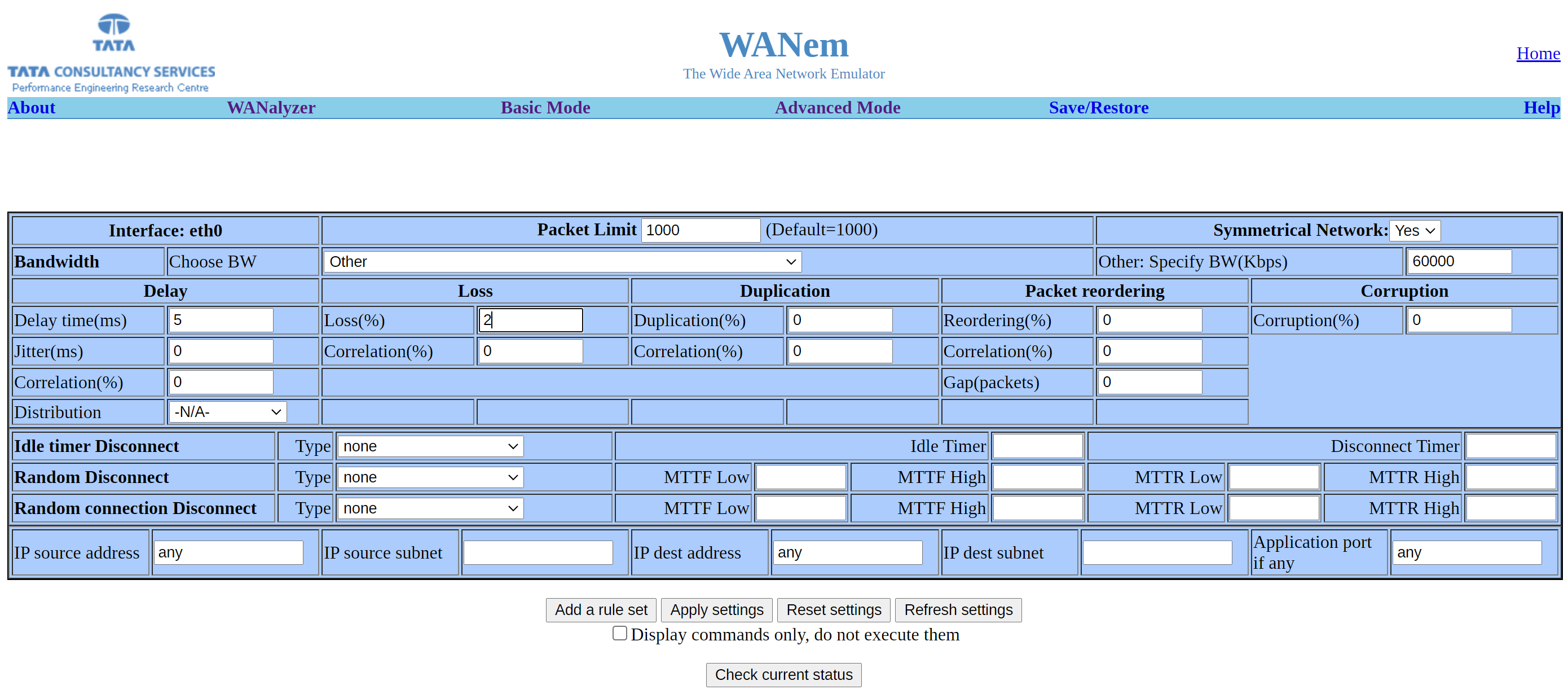

Access the WANem web UI at http://192.168.91.7/WANem, and go to Advanced Mode. From that page, we can configure multiple parameters for each NIC (eth0 and eth1, in this case). For eth0, I’ve set a bandwidth of 60000 Kbps, 5 ms delay, and 2% loss:

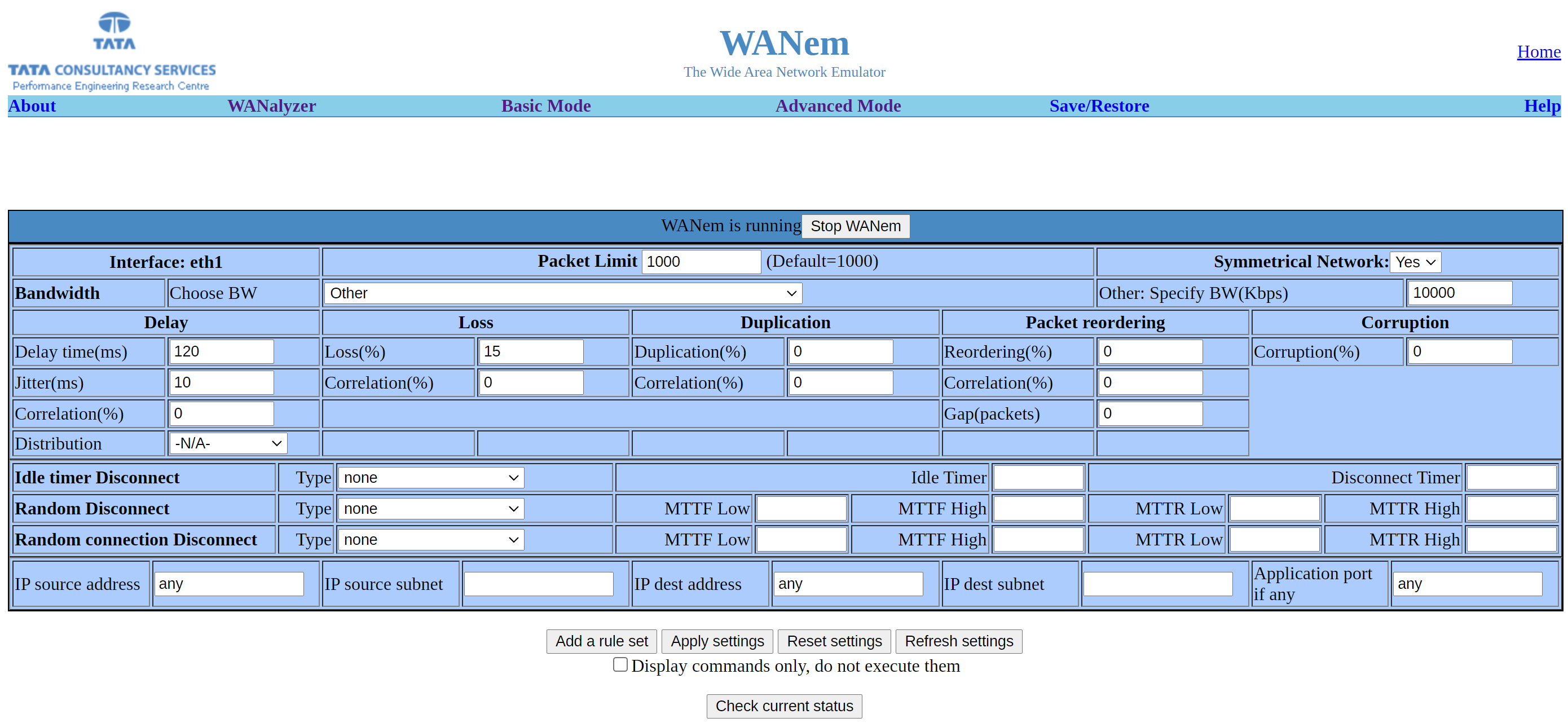

And for eth1, I’ve set a bandwidth of 10000 Kbps, 120 ms delay, 10 ms of jitter, and 15% loss. Yeah, a crappy link. :) This difference between eth0 and eth1 will allow us to see some interesting things.

Testing the WAN link

I chose iPerf 3 and flood pings to test the WAN link simulated by WANem, as they are pretty straightforward to use and can give us some useful insights.
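The tests can be run roughly as follows. The options are a plausible sketch rather than the exact invocations behind the results, and 10.0.0.2 is a hypothetical address for linux-vm-02:

```shell
# On linux-vm-02: start the iPerf 3 server (TCP port 5201 by default).
iperf3 -s

# On linux-vm-01: run a 10-second TCP test towards linux-vm-02.
iperf3 -c 10.0.0.2 -t 10

# On linux-vm-01, as root: flood ping with a fixed packet count,
# so that it stops by itself and prints the loss and RTT summary.
ping -f -c 1000 10.0.0.2
```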

First, before configuring the WAN link, with just plain routing between linux-vm-01 and linux-vm-02, we got about 50 Mbps of bandwidth between the VMs, with some losses along the way. The ancient Knoppix with kernel 2.6 probably couldn’t give us more, as the hardware resources weren’t constrained.

The flood ping went fine, without any losses, and with about 0.5 ms delay.

Now, with the WAN link configured as described in the previous section, we got a very different result.

Notice how we had many losses along the way, with an average RTT of 126 ms. In iPerf we can see how it translates to a very low bitrate and a very small TCP congestion window. Also, the bitrate was much lower than the 10000 Kbps configured on eth1: remember the losses are in play in this scenario (the way the bitrate and congestion window grow and shrink regularly also tells a lot).
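To get a feel for why loss hurts so much, the well-known approximation by Mathis et al. bounds steady-state TCP throughput by roughly (MSS / RTT) × (C / sqrt(p)), where p is the loss rate and C is about 1.22. The sketch below plugs in the 126 ms RTT and the 15% loss configured on eth1. The 1460-byte MSS is an assumption, and the model is only a rough guide at loss rates this high:

```shell
# Rough TCP throughput ceiling in kbit/s (Mathis et al. approximation).
# Arguments: MSS in bytes, RTT in seconds, loss rate as a fraction.
mathis_kbps() {
  awk -v mss="$1" -v rtt="$2" -v p="$3" \
    'BEGIN { printf "%.0f\n", (mss * 8 / rtt) * (sqrt(1.5) / sqrt(p)) / 1000 }'
}

# 1460-byte MSS (assumed), 126 ms RTT, 15% loss.
mathis_kbps 1460 0.126 0.15
```

With these inputs the estimate lands at a few hundred kbit/s, far below the 10000 Kbps configured on eth1.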

Don’t believe me? Well, let’s remove the loss configured in WANem, leaving the rest untouched.

Ah-ha, now we can reach the wire speed of wanem-vm’s eth1. Well, sort of: there are still some TCP retransmissions, probably because of bufferbloat in WANem, especially given the asymmetry of the two links (eth0 and eth1).
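For reference, filling a link requires roughly one bandwidth-delay product (BDP) of data in flight. Anything the sender pushes beyond that sits in a queue somewhere, which is where bufferbloat and the resulting drops come from. A small sketch of the calculation, assuming 1 Kbps means 1000 bit/s and that the RTT stays around the 126 ms measured earlier:

```shell
# Bandwidth-delay product in bytes.
# Arguments: link rate in kbit/s, RTT in seconds.
bdp_bytes() {
  awk -v kbps="$1" -v rtt="$2" \
    'BEGIN { printf "%.0f\n", kbps * 1000 * rtt / 8 }'
}

# eth1 rate of 10000 kbit/s, RTT of about 126 ms.
bdp_bytes 10000 0.126
```

That works out to about 157 kB, so a congestion window of that order is enough to reach the configured rate on eth1.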

Conclusions

This is a pretty straightforward way to simulate WAN links, which may be very useful when we need to replicate certain scenarios to understand how applications behave (and how to fine-tune them accordingly).

As shown in the screenshots, WANem can simulate bandwidth (symmetric or asymmetric), delay (with jitter), loss, duplication, packet reordering, corruption, disconnects (including at random), MTTF and MTTR; and we can even create multiple rules for different subnets. Awesome job by the team behind WANem.

I also plan to set up a similar scenario but using a plain Linux VM as a router, instead of WANem. In this case, I’ll leverage iproute2’s Traffic Control (tc) to apply some traffic shaping policies. Perhaps I’ll even experiment with iptables’ hashlimit extension. Stay tuned!
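As a taste of what that could look like, here is a minimal sketch using tc with the netem qdisc to mirror the eth1 settings from this post. The interface name is hypothetical, and this is only one of several ways to do it:

```shell
# Run as root on the Linux router. eth1 is a hypothetical interface name.
# Let the kernel forward packets between its interfaces.
sysctl -w net.ipv4.ip_forward=1

# 120 ms delay with 10 ms of jitter, 15% loss, 10 Mbit/s rate limit.
tc qdisc add dev eth1 root netem delay 120ms 10ms loss 15% rate 10mbit

# Inspect the qdisc, then remove it when done.
tc qdisc show dev eth1
tc qdisc del dev eth1 root
```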